0- Background:

This article uses regularization to deal with overfitting when training a neural network.

0-1 Prerequisites:

```python
# import packages
import numpy as np
import matplotlib.pyplot as plt
from reg_utils import sigmoid, relu, plot_decision_boundary, initialize_parameters, load_2D_dataset, predict_dec
from reg_utils import compute_cost, predict, forward_propagation, backward_propagation, update_parameters
import sklearn
import sklearn.datasets
import scipy.io
from testCases import *

%matplotlib inline
plt.rcParams['figure.figsize'] = (7.0, 4.0)  # set default size of plots
plt.rcParams['image.interpolation'] = 'nearest'
plt.rcParams['image.cmap'] = 'gray'
```

0-2 Problem description:

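We have a 2D dataset that records, for balls kicked by the French team's goalkeeper, the positions on the field where a player then hit the ball with his or her head.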

Loading the data:

```python
train_X, train_Y, test_X, test_Y = load_2D_dataset()
```

A blue dot means a French player headed the ball; a red dot means a player from the opposing team headed it (unfortunately for France).

Our goal is therefore to use a deep learning model to find where on the field the goalkeeper should kick the ball.

This article compares three models: a non-regularized model, an L2-regularized model, and a dropout model.

1- Non-regularized model

1-1 Model overview

The regularization methods covered here are L2 regularization and dropout.

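L2 regularization is controlled by the hyperparameter lambd (spelled this way because lambda is a reserved keyword in Python) and uses the functions compute_cost_with_regularization() and backward_propagation_with_regularization().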

Dropout is controlled by keep_prob, the probability that each neuron is kept active. It uses forward_propagation_with_dropout() and backward_propagation_with_dropout(); their implementations are given later.

```python
def model(X, Y, learning_rate=0.3, num_iterations=30000, print_cost=True, lambd=0, keep_prob=1):
    """
    Implements a three-layer neural network: LINEAR->RELU->LINEAR->RELU->LINEAR->SIGMOID.

    Arguments:
    X -- input data, of shape (input size, number of examples)
    Y -- true "label" vector (1 for blue dot / 0 for red dot), of shape (output size, number of examples)
    learning_rate -- learning rate of the optimization
    num_iterations -- number of iterations of the optimization loop
    print_cost -- If True, print the cost every 10000 iterations
    lambd -- regularization hyperparameter, scalar
    keep_prob -- probability of keeping a neuron active during drop-out, scalar

    Returns:
    parameters -- parameters learned by the model. They can then be used to predict.
    """
    print("learning_rate=", learning_rate)
    print("num_iterations=", num_iterations)
    print("print_cost=", print_cost)
    print("lambd=", lambd)
    print("keep_prob=", keep_prob)

    grads = {}
    costs = []                            # to keep track of the cost
    m = X.shape[1]                        # number of examples
    layers_dims = [X.shape[0], 20, 3, 1]

    # Initialize parameters dictionary.
    parameters = initialize_parameters(layers_dims)

    # Loop (gradient descent)
    for i in range(0, num_iterations):

        # Forward propagation: LINEAR -> RELU -> LINEAR -> RELU -> LINEAR -> SIGMOID.
        if keep_prob == 1:
            a3, cache = forward_propagation(X, parameters)
        elif keep_prob < 1:
            a3, cache = forward_propagation_with_dropout(X, parameters, keep_prob)

        # Cost function
        if lambd == 0:
            cost = compute_cost(a3, Y)
        else:
            cost = compute_cost_with_regularization(a3, Y, parameters, lambd)

        # Backward propagation.
        # It is possible to use both L2 regularization and dropout, but this assignment
        # explores only one at a time: the assert ensures at most one of them is enabled.
        # Supporting both at once would require a new backward function.
        assert lambd == 0 or keep_prob == 1

        if lambd == 0 and keep_prob == 1:
            grads = backward_propagation(X, Y, cache)
        elif lambd != 0:
            grads = backward_propagation_with_regularization(X, Y, cache, lambd)
        elif keep_prob < 1:
            grads = backward_propagation_with_dropout(X, Y, cache, keep_prob)

        # Update parameters.
        parameters = update_parameters(parameters, grads, learning_rate)

        # Print the cost every 10000 iterations; record it every 1000 iterations for the plot
        if print_cost and i % 10000 == 0:
            print("Cost after iteration {}: {}".format(i, cost))
        if print_cost and i % 1000 == 0:
            costs.append(cost)

    # plot the cost
    plt.plot(costs)
    plt.ylabel('cost')
    plt.xlabel('iterations (x1,000)')
    plt.title("Learning rate =" + str(learning_rate))
    plt.show()

    return parameters
```

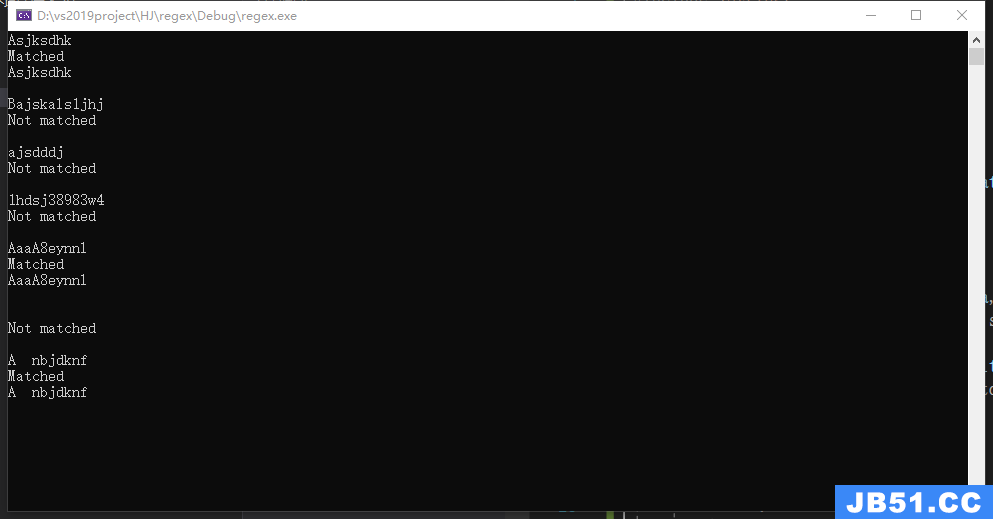

1-2 Non-regularized model

Training the model without regularization:

```python
parameters = model(train_X, train_Y)
print("On the training set:")
predictions_train = predict(train_X, train_Y, parameters)
print("On the test set:")
predictions_test = predict(test_X, test_Y, parameters)
```

Output:

Cost after iteration 0: 0.6557412523481002

Cost after iteration 10000: 0.1632998752572419

Cost after iteration 20000: 0.13851642423239133

The cost curve is shown below:

Accuracy on the training and test sets:

On the training set:

Accuracy: 0.947867298578

On the test set:

Accuracy: 0.915

This model serves as the baseline: 94.8% accuracy on the training set and 91.5% on the test set. Its decision boundary is plotted as follows:

```python
plt.title("Model without regularization")
axes = plt.gca()
axes.set_xlim([-0.75, 0.40])
axes.set_ylim([-0.75, 0.65])
plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)
```

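The decision boundary bends to fit individual noisy points, which shows the model is overfitting. We therefore try two techniques to reduce overfitting.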

2- L2 regularization

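The standard way to avoid overfitting is L2 regularization. It modifies the cost function from the cross-entropy cost

$$J = -\frac{1}{m} \sum\limits_{i = 1}^{m} \left( y^{(i)}\log\left(a^{[L](i)}\right) + \left(1-y^{(i)}\right)\log\left(1- a^{[L](i)}\right) \right) \tag{1}$$

to:

$$J_{regularized} = \underbrace{-\frac{1}{m} \sum\limits_{i = 1}^{m} \left( y^{(i)}\log\left(a^{[L](i)}\right) + \left(1-y^{(i)}\right)\log\left(1- a^{[L](i)}\right) \right)}_{\text{cross-entropy cost}} + \underbrace{\frac{1}{m} \frac{\lambda}{2} \sum\limits_l\sum\limits_k\sum\limits_j \left(W_{k,j}^{[l]}\right)^2}_{\text{L2 regularization cost}} \tag{2}$$

We therefore define compute_cost_with_regularization() to compute the cost in formula (2).

For a single weight matrix $W^{[l]}$, the term $\sum_k\sum_j \left(W_{k,j}^{[l]}\right)^2$ is computed with `np.sum(np.square(Wl))`.

Since the network has three weight matrices (W1, W2 and W3), we compute this sum for each of them, add the three results, and multiply by $\frac{\lambda}{2m}$.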
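To make formula (2) concrete without depending on `reg_utils`, here is a minimal self-contained sketch. It assumes that `compute_cost` is the standard binary cross-entropy of formula (1); the helper names `cross_entropy_cost` and `l2_regularized_cost` are hypothetical and not part of the assignment code.

```python
import numpy as np

def cross_entropy_cost(A3, Y):
    """Formula (1): binary cross-entropy averaged over the m examples (assumed to match compute_cost)."""
    m = Y.shape[1]
    logprobs = Y * np.log(A3) + (1 - Y) * np.log(1 - A3)
    return -np.sum(logprobs) / m

def l2_regularized_cost(A3, Y, weights, lambd):
    """Formula (2): cross-entropy plus (lambd / (2m)) * sum of all squared weights.

    `weights` is a list of weight matrices, e.g. [W1, W2, W3] (hypothetical interface).
    """
    m = Y.shape[1]
    l2_term = sum(np.sum(np.square(W)) for W in weights) * lambd / (2 * m)
    return cross_entropy_cost(A3, Y) + l2_term

# Tiny example: 4 examples and weight matrices filled with ones.
A3 = np.array([[0.9, 0.2, 0.8, 0.4]])
Y = np.array([[1, 0, 1, 0]])
weights = [np.ones((3, 2)), np.ones((1, 3))]   # 6 + 3 = 9 squared entries, each equal to 1

base = l2_regularized_cost(A3, Y, weights, lambd=0.0)   # plain cross-entropy
reg = l2_regularized_cost(A3, Y, weights, lambd=0.8)    # adds 0.8 / (2 * 4) * 9 = 0.9
print(base, reg)
```

Passing the weights as a list instead of hard-coding W1, W2 and W3 is only a design choice for the sketch; it lets the same function handle networks of any depth.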

Implementation:

```python
# GRADED FUNCTION: compute_cost_with_regularization

def compute_cost_with_regularization(A3, Y, parameters, lambd):
    """
    Implement the cost function with L2 regularization. See formula (2) above.

    Arguments:
    A3 -- post-activation, output of forward propagation, of shape (output size, number of examples)
    Y -- "true" labels vector, of shape (output size, number of examples)
    parameters -- python dictionary containing parameters of the model
    lambd -- regularization hyperparameter, scalar

    Returns:
    cost -- value of the regularized loss function (formula (2))
    """
    m = Y.shape[1]
    W1 = parameters["W1"]
    W2 = parameters["W2"]
    W3 = parameters["W3"]

    cross_entropy_cost = compute_cost(A3, Y)  # This gives you the cross-entropy part of the cost

    ### START CODE HERE ### (approx. 1 line)
    L2_regularization_cost = (np.sum(np.square(W1)) + np.sum(np.square(W2)) + np.sum(np.square(W3))) * lambd / (2 * m)
    ### END CODE HERE ###

    cost = cross_entropy_cost + L2_regularization_cost

    return cost
```

Testing the function:

```python
A3, Y_assess, parameters = compute_cost_with_regularization_test_case()
print("cost = " + str(compute_cost_with_regularization(A3, Y_assess, parameters, lambd=0.1)))
```

Output:

cost = 1.78648594516

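Because the cost function has changed, backpropagation must change too. Again, only dW1, dW2 and dW3 are affected: for each of them, add the gradient of the regularization term, $\frac{d}{dW}\left(\frac{1}{2}\frac{\lambda}{m}W^2\right) = \frac{\lambda}{m}W$.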
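A quick way to convince yourself that the extra gradient term is $\frac{\lambda}{m}W$ is a finite-difference check. The sketch below compares the analytic gradient with a central-difference estimate on a random matrix; `l2_term`, `l2_term_grad` and `numerical_grad` are hypothetical helper names, and the shape (3, 2), λ = 0.7 and m = 5 are arbitrary illustrative values.

```python
import numpy as np

def l2_term(W, lambd, m):
    """Regularization term for one weight matrix: (lambd / (2m)) * sum(W**2)."""
    return lambd / (2 * m) * np.sum(np.square(W))

def l2_term_grad(W, lambd, m):
    """Analytic gradient of l2_term with respect to W: (lambd / m) * W."""
    return lambd / m * W

def numerical_grad(f, W, eps=1e-6):
    """Central-difference estimate of the gradient of scalar function f at W, entry by entry."""
    grad = np.zeros_like(W)
    for idx in np.ndindex(W.shape):
        W_plus, W_minus = W.copy(), W.copy()
        W_plus[idx] += eps
        W_minus[idx] -= eps
        grad[idx] = (f(W_plus) - f(W_minus)) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
W = rng.standard_normal((3, 2))
lambd, m = 0.7, 5

analytic = l2_term_grad(W, lambd, m)
numeric = numerical_grad(lambda w: l2_term(w, lambd, m), W)
print(np.max(np.abs(analytic - numeric)))  # only rounding error remains
```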

Implementation:

```python
# GRADED FUNCTION: backward_propagation_with_regularization

def backward_propagation_with_regularization(X, Y, cache, lambd):
    """
    Implements the backward propagation of our baseline model to which we added an L2 regularization.

    Arguments:
    X -- input dataset, of shape (input size, number of examples)
    Y -- "true" labels vector, of shape (output size, number of examples)
    cache -- cache output from forward_propagation()
    lambd -- regularization hyperparameter, scalar

    Returns:
    gradients -- A dictionary with the gradients with respect to each parameter, activation and pre-activation variables
    """
    m = X.shape[1]
    (Z1, A1, W1, b1, Z2, A2, W2, b2, Z3, A3, W3, b3) = cache

    dZ3 = A3 - Y
    ### START CODE HERE ### (approx. 1 line)
    dW3 = 1./m * np.dot(dZ3, A2.T) + lambd * W3 / m
    ### END CODE HERE ###
    db3 = 1./m * np.sum(dZ3, axis=1, keepdims=True)

    dA2 = np.dot(W3.T, dZ3)
    dZ2 = np.multiply(dA2, np.int64(A2 > 0))
    ### START CODE HERE ### (approx. 1 line)
    dW2 = 1./m * np.dot(dZ2, A1.T) + lambd * W2 / m
    ### END CODE HERE ###
    db2 = 1./m * np.sum(dZ2, axis=1, keepdims=True)

    dA1 = np.dot(W2.T, dZ2)
    dZ1 = np.multiply(dA1, np.int64(A1 > 0))
    ### START CODE HERE ### (approx. 1 line)
    dW1 = 1./m * np.dot(dZ1, X.T) + lambd * W1 / m
    ### END CODE HERE ###
    db1 = 1./m * np.sum(dZ1, axis=1, keepdims=True)

    gradients = {"dZ3": dZ3, "dW3": dW3, "db3": db3, "dA2": dA2,
                 "dZ2": dZ2, "dW2": dW2, "db2": db2, "dA1": dA1,
                 "dZ1": dZ1, "dW1": dW1, "db1": db1}

    return gradients
```

Test code:

```python
X_assess, Y_assess, cache = backward_propagation_with_regularization_test_case()
grads = backward_propagation_with_regularization(X_assess, Y_assess, cache, lambd=0.7)
print("dW1 = " + str(grads["dW1"]))
print("dW2 = " + str(grads["dW2"]))
print("dW3 = " + str(grads["dW3"]))
```

Output:

dW1 = [[-0.25604646 0.12298827 -0.28297129] [-0.17706303 0.34536094 -0.4410571 ]]

dW2 = [[ 0.79276486 0.85133918] [-0.0957219 -0.01720463] [-0.13100772 -0.03750433]]

dW3 = [[-1.77691347 -0.11832879 -0.09397446]]

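With these pieces in place, we can train the model with L2 regularization, setting $\lambda = 0.7$:

```python
parameters = model(train_X, train_Y, lambd=0.7)
print("On the train set:")
predictions_train = predict(train_X, train_Y, parameters)
print("On the test set:")
predictions_test = predict(test_X, test_Y, parameters)
```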

Output:

Cost after iteration 0: 0.6974484493131264

Cost after iteration 10000: 0.2684918873282239

Cost after iteration 20000: 0.2680916337127301

Cost curve:

Accuracy:

On the train set:

Accuracy: 0.938388625592

On the test set:

Accuracy: 0.93

Test-set accuracy has now improved to 93%.

Decision boundary:

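```python
plt.title("Model with L2-regularization")
axes = plt.gca()
axes.set_xlim([-0.75, 0.40])
axes.set_ylim([-0.75, 0.65])
plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)
```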

Discussion:

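L2 regularization relies on the assumption that a model with small weights is simpler than a model with large weights. By adding the squared weights to the cost function, every backpropagation step pushes the weights toward smaller values, which produces a smoother decision boundary.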
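The "weights get smaller" effect is visible directly in the update rule: adding $\frac{\lambda}{m}W$ to the gradient is the same as first shrinking W by the factor $1 - \frac{\alpha\lambda}{m}$ and then taking the ordinary gradient step, which is why L2 regularization is also called weight decay. A minimal sketch, with hypothetical function names, random stand-in gradients and an illustrative m = 100:

```python
import numpy as np

def l2_update(W, dW_data, learning_rate, lambd, m):
    """One gradient-descent step using the regularized gradient dW_data + (lambd / m) * W."""
    return W - learning_rate * (dW_data + lambd / m * W)

def weight_decay_update(W, dW_data, learning_rate, lambd, m):
    """The same step written as: shrink W by a constant factor, then apply the data gradient."""
    decay = 1 - learning_rate * lambd / m
    return decay * W - learning_rate * dW_data

rng = np.random.default_rng(1)
W = rng.standard_normal((3, 20))        # same shape as W2 in this network
dW_data = rng.standard_normal((3, 20))  # stand-in for the cross-entropy gradient

a = l2_update(W, dW_data, learning_rate=0.3, lambd=0.7, m=100)
b = weight_decay_update(W, dW_data, learning_rate=0.3, lambd=0.7, m=100)
print(np.allclose(a, b))  # True: the two forms are algebraically identical
```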

3- Dropout regularization

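Dropout randomly shuts down neurons with a certain probability in each iteration, which simplifies the model and reduces overfitting. Because a different subset of neurons is active in each iteration, applying dropout amounts to training a different sub-model every time. Since any neuron may be dropped, the others become less sensitive to (less reliant on) the activation of any one specific neuron.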
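To illustrate the "different subset of neurons in each iteration" idea, the sketch below samples one fresh mask per iteration for a 20-unit hidden layer (the size of the first hidden layer in `layers_dims`) with keep_prob = 0.86. It uses NumPy's `default_rng` generator rather than the `np.random.rand` calls used elsewhere in this article, and the iteration count is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(42)
keep_prob = 0.86
n_hidden = 20          # size of the first hidden layer in layers_dims
n_iterations = 1000    # arbitrary number of training iterations to simulate

# One fresh mask per iteration: True = neuron kept, False = neuron dropped.
masks = rng.random((n_iterations, n_hidden)) < keep_prob

# On average, about keep_prob of the neurons stay active.
active_fraction = masks.mean()

# Count how many different sub-networks (distinct masks) were sampled.
distinct_subnets = len({row.tobytes() for row in masks})

print(f"average fraction of active neurons: {active_fraction:.3f}")
print(f"distinct sub-networks over {n_iterations} iterations: {distinct_subnets}")
```

On average roughly keep_prob of the units stay active, and many different sub-networks are trained over the course of the iterations.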

3-1 Forward propagation with dropout

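Forward propagation with dropout takes four steps (described here for layer 1):

- Step 1: Create a random vector $d^{[1]}$ with the same shape as $a^{[1]}$ using `np.random.rand()`. In the vectorized version, create a matrix $D^{[1]} = [d^{[1](1)}\ d^{[1](2)}\ \dots\ d^{[1](m)}]$ with the same shape as $A^{[1]}$, initialized the same way.
- Step 2: Turn $D^{[1]}$ into a 0/1 mask by thresholding with keep_prob, so that each entry is 1 with probability keep_prob and 0 otherwise. For example, `X = (X < 0.5)` turns a random matrix X into a mask in which roughly half the entries are 1; here the threshold is keep_prob, i.e. `D1 = (D1 < keep_prob)`. The mask selects which neurons are kept.
- Step 3: Set $A^{[1]}$ to $A^{[1]} * D^{[1]}$ to shut down the masked neurons.
- Step 4: Divide $A^{[1]}$ by keep_prob so that the expected value of the activations stays the same as without dropout (inverted dropout).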
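The four steps can be sketched for a single layer as a standalone function. This is a minimal sketch, assuming non-negative random stand-in activations in place of real ReLU outputs; `dropout_forward` is a hypothetical name, and it draws uniform [0, 1) numbers from `np.random.default_rng` instead of `np.random.rand`. It also checks the point of step 4: after rescaling, the mean activation stays close to the original.

```python
import numpy as np

def dropout_forward(A, keep_prob, rng):
    """Inverted dropout on activations A. Returns the new activations and the mask D."""
    D = rng.random(A.shape)   # Step 1: random matrix with the same shape as A
    D = D < keep_prob         # Step 2: True (1) with probability keep_prob, else False (0)
    A = A * D                 # Step 3: shut down the masked neurons
    A = A / keep_prob         # Step 4: rescale so the expected value is unchanged
    return A, D

rng = np.random.default_rng(0)
A1 = np.abs(rng.standard_normal((20, 10000)))  # stand-in for ReLU outputs (non-negative)
A1_drop, D1 = dropout_forward(A1, keep_prob=0.8, rng=rng)

print(A1.mean(), A1_drop.mean())  # the two means should be close
print(np.all(A1_drop[~D1] == 0))  # True: dropped units output exactly 0
```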

Implementation:

```python
# GRADED FUNCTION: forward_propagation_with_dropout

def forward_propagation_with_dropout(X, parameters, keep_prob=0.5):
    """
    Implements the forward propagation: LINEAR -> RELU + DROPOUT -> LINEAR -> RELU + DROPOUT -> LINEAR -> SIGMOID.

    Arguments:
    X -- input dataset, of shape (2, number of examples)
    parameters -- python dictionary containing your parameters "W1", "b1", "W2", "b2", "W3", "b3":
                    W1 -- weight matrix of shape (20, 2)
                    b1 -- bias vector of shape (20, 1)
                    W2 -- weight matrix of shape (3, 20)
                    b2 -- bias vector of shape (3, 1)
                    W3 -- weight matrix of shape (1, 3)
                    b3 -- bias vector of shape (1, 1)
    keep_prob -- probability of keeping a neuron active during drop-out, scalar

    Returns:
    A3 -- last activation value, output of the forward propagation, of shape (1, number of examples)
    cache -- tuple, information stored for computing the backward propagation
    """
    # Fixed seed so the test output below is reproducible. Because the seed is reset on
    # every call, model() ends up reusing the same dropout masks in every iteration.
    np.random.seed(1)

    # retrieve parameters
    W1 = parameters["W1"]
    b1 = parameters["b1"]
    W2 = parameters["W2"]
    b2 = parameters["b2"]
    W3 = parameters["W3"]
    b3 = parameters["b3"]

    # LINEAR -> RELU -> LINEAR -> RELU -> LINEAR -> SIGMOID
    Z1 = np.dot(W1, X) + b1
    A1 = relu(Z1)
    ### START CODE HERE ### (approx. 4 lines)  # Steps 1-4 below correspond to the Steps 1-4 described above.
    D1 = np.random.rand(A1.shape[0], A1.shape[1])  # Step 1: initialize matrix D1 = np.random.rand(..., ...)
    D1 = (D1 < keep_prob)                          # Step 2: convert entries of D1 to 0 or 1 (using keep_prob as the threshold)
    A1 = A1 * D1                                   # Step 3: shut down some neurons of A1
    A1 = A1 / keep_prob                            # Step 4: scale the value of neurons that haven't been shut down
    ### END CODE HERE ###

    Z2 = np.dot(W2, A1) + b2
    A2 = relu(Z2)
    ### START CODE HERE ### (approx. 4 lines)
    D2 = np.random.rand(A2.shape[0], A2.shape[1])  # Step 1: initialize matrix D2 = np.random.rand(..., ...)
    D2 = (D2 < keep_prob)                          # Step 2: convert entries of D2 to 0 or 1 (using keep_prob as the threshold)
    A2 = A2 * D2                                   # Step 3: shut down some neurons of A2
    A2 = A2 / keep_prob                            # Step 4: scale the value of neurons that haven't been shut down
    ### END CODE HERE ###

    Z3 = np.dot(W3, A2) + b3
    A3 = sigmoid(Z3)

    # The masks D1 and D2 are cached so backpropagation can reuse them.
    cache = (Z1, D1, A1, W1, b1, Z2, D2, A2, W2, b2, Z3, A3, W3, b3)

    return A3, cache
```

Test code:

```python
X_assess, parameters = forward_propagation_with_dropout_test_case()
A3, cache = forward_propagation_with_dropout(X_assess, parameters, keep_prob=0.7)
print("A3 = " + str(A3))
```

Output:

A3 = [[ 0.36974721 0.00305176 0.04565099 0.49683389 0.36974721]]

3-2 Backward propagation with dropout

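Backpropagation with dropout takes two steps:

- Step 1: During forward propagation we shut down some neurons by applying the mask $D^{[1]}$ to A1. In backpropagation, shut down the same neurons by applying the same mask $D^{[1]}$ to dA1.
- Step 2: During forward propagation we divided A1 by keep_prob to preserve its expected value. In backpropagation, divide dA1 by keep_prob as well: because $A^{[1]}$ was scaled by $\frac{1}{keep\_prob}$, its derivative $dA^{[1]}$ must be scaled by the same factor.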
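Taken together, the two steps multiply the upstream gradient by D / keep_prob, the same factor the forward pass applied. The sketch below (hypothetical `dropout_backward` helper, random stand-in arrays) checks this against a finite-difference gradient of a simple scalar loss, with the mask held fixed as it is in the cache.

```python
import numpy as np

def dropout_backward(dA_out, D, keep_prob):
    """Backward pass of inverted dropout: apply the forward mask D, then divide by keep_prob."""
    return dA_out * D / keep_prob

rng = np.random.default_rng(3)
keep_prob = 0.8
A = rng.standard_normal((3, 4))
D = rng.random(A.shape) < keep_prob   # fixed mask, as stored in the forward cache
C = rng.standard_normal(A.shape)      # arbitrary upstream weights defining a scalar loss

def loss(A_in):
    """Scalar loss L = sum(C * dropout(A_in)) with the mask D held fixed."""
    return np.sum(C * (A_in * D / keep_prob))

# dL/d(dropout output) is C, so the backward rule should give C * D / keep_prob.
analytic = dropout_backward(C, D, keep_prob)

eps = 1e-6
numeric = np.zeros_like(A)
for idx in np.ndindex(A.shape):
    A_plus, A_minus = A.copy(), A.copy()
    A_plus[idx] += eps
    A_minus[idx] -= eps
    numeric[idx] = (loss(A_plus) - loss(A_minus)) / (2 * eps)

print(np.max(np.abs(analytic - numeric)))  # tiny: the backward rule matches finite differences
```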

Implementation:

```python
# GRADED FUNCTION: backward_propagation_with_dropout

def backward_propagation_with_dropout(X, Y, cache, keep_prob):
    """
    Implements the backward propagation of our baseline model to which we added dropout.

    Arguments:
    X -- input dataset, of shape (2, number of examples)
    Y -- "true" labels vector, of shape (output size, number of examples)
    cache -- cache output from forward_propagation_with_dropout()
    keep_prob -- probability of keeping a neuron active during drop-out, scalar

    Returns:
    gradients -- A dictionary with the gradients with respect to each parameter, activation and pre-activation variables
    """
    m = X.shape[1]
    (Z1, D1, A1, W1, b1, Z2, D2, A2, W2, b2, Z3, A3, W3, b3) = cache

    dZ3 = A3 - Y
    dW3 = 1./m * np.dot(dZ3, A2.T)
    db3 = 1./m * np.sum(dZ3, axis=1, keepdims=True)
    dA2 = np.dot(W3.T, dZ3)
    ### START CODE HERE ### (≈ 2 lines of code)
    dA2 = dA2 * D2         # Step 1: Apply mask D2 to shut down the same neurons as during the forward propagation
    dA2 = dA2 / keep_prob  # Step 2: Scale the value of neurons that haven't been shut down
    ### END CODE HERE ###
    dZ2 = np.multiply(dA2, np.int64(A2 > 0))
    dW2 = 1./m * np.dot(dZ2, A1.T)
    db2 = 1./m * np.sum(dZ2, axis=1, keepdims=True)

    dA1 = np.dot(W2.T, dZ2)
    ### START CODE HERE ### (≈ 2 lines of code)
    dA1 = dA1 * D1         # Step 1: Apply mask D1 to shut down the same neurons as during the forward propagation
    dA1 = dA1 / keep_prob  # Step 2: Scale the value of neurons that haven't been shut down
    ### END CODE HERE ###
    dZ1 = np.multiply(dA1, np.int64(A1 > 0))
    dW1 = 1./m * np.dot(dZ1, X.T)
    db1 = 1./m * np.sum(dZ1, axis=1, keepdims=True)

    gradients = {"dZ3": dZ3, "dW3": dW3, "db3": db3, "dA2": dA2,
                 "dZ2": dZ2, "dW2": dW2, "db2": db2, "dA1": dA1,
                 "dZ1": dZ1, "dW1": dW1, "db1": db1}

    return gradients
```

Test code:

```python
X_assess, Y_assess, cache = backward_propagation_with_dropout_test_case()
gradients = backward_propagation_with_dropout(X_assess, Y_assess, cache, keep_prob=0.8)
print("dA1 = " + str(gradients["dA1"]))
print("dA2 = " + str(gradients["dA2"]))
```

Output:

dA1 = [[ 0.36544439 0. -0.00188233 0. -0.17408748] [ 0.65515713 0. -0.00337459 0. -0. ]]

dA2 = [[ 0.58180856 0. -0.00299679 0. -0.27715731] [ 0. 0.53159854 -0. 0.53159854 -0.34089673] [ 0. 0. -0.00292733 0. -0. ]]

Next, we run the model with keep_prob = 0.86. Because keep_prob < 1, model() replaces forward_propagation and backward_propagation with the new functions forward_propagation_with_dropout and backward_propagation_with_dropout.

Running the model:

```python
parameters = model(train_X, train_Y, keep_prob=0.86, learning_rate=0.3)
print("On the train set:")
predictions_train = predict(train_X, train_Y, parameters)
print("On the test set:")
predictions_test = predict(test_X, test_Y, parameters)
```

Output:

Cost after iteration 0: 0.6543912405149825

Cost after iteration 10000: 0.061016986574905605

Cost after iteration 20000: 0.060582435798513114

Cost curve:

Accuracy:

On the train set:

Accuracy: 0.928909952607

On the test set:

Accuracy: 0.95

Dropout raises test-set accuracy to 95%. The model no longer overfits the training set and performs well on the test set.

Decision boundary:

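```python
plt.title("Model with dropout")
axes = plt.gca()
axes.set_xlim([-0.75, 0.40])
axes.set_ylim([-0.75, 0.65])
plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)
```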

Discussion:

A common mistake is to apply dropout both during training and at test time. Remember: use dropout only during training!
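One way to make this rule hard to break is to pass an explicit training flag and skip the mask entirely at test time. The sketch below is hypothetical (the `hidden_layer` function and its `training` argument are not part of the assignment code); in this article the same separation comes from calling forward_propagation_with_dropout only inside model() when keep_prob < 1. Because inverted dropout already divides by keep_prob during training, no rescaling is needed at test time.

```python
import numpy as np

def relu(Z):
    return np.maximum(0, Z)

def hidden_layer(X, W, b, keep_prob, training, rng=None):
    """One LINEAR -> RELU layer; inverted dropout is applied only when training=True."""
    A = relu(W @ X + b)
    if training and keep_prob < 1:
        D = rng.random(A.shape) < keep_prob
        A = A * D / keep_prob
    return A

rng = np.random.default_rng(0)
W = rng.standard_normal((20, 2))
b = np.zeros((20, 1))
X = rng.standard_normal((2, 5))

# At test time the output is deterministic and equals the plain layer without dropout.
test_out_1 = hidden_layer(X, W, b, keep_prob=0.86, training=False)
test_out_2 = hidden_layer(X, W, b, keep_prob=0.86, training=False)
print(np.array_equal(test_out_1, test_out_2))          # True
print(np.array_equal(test_out_1, relu(W @ X + b)))     # True
```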

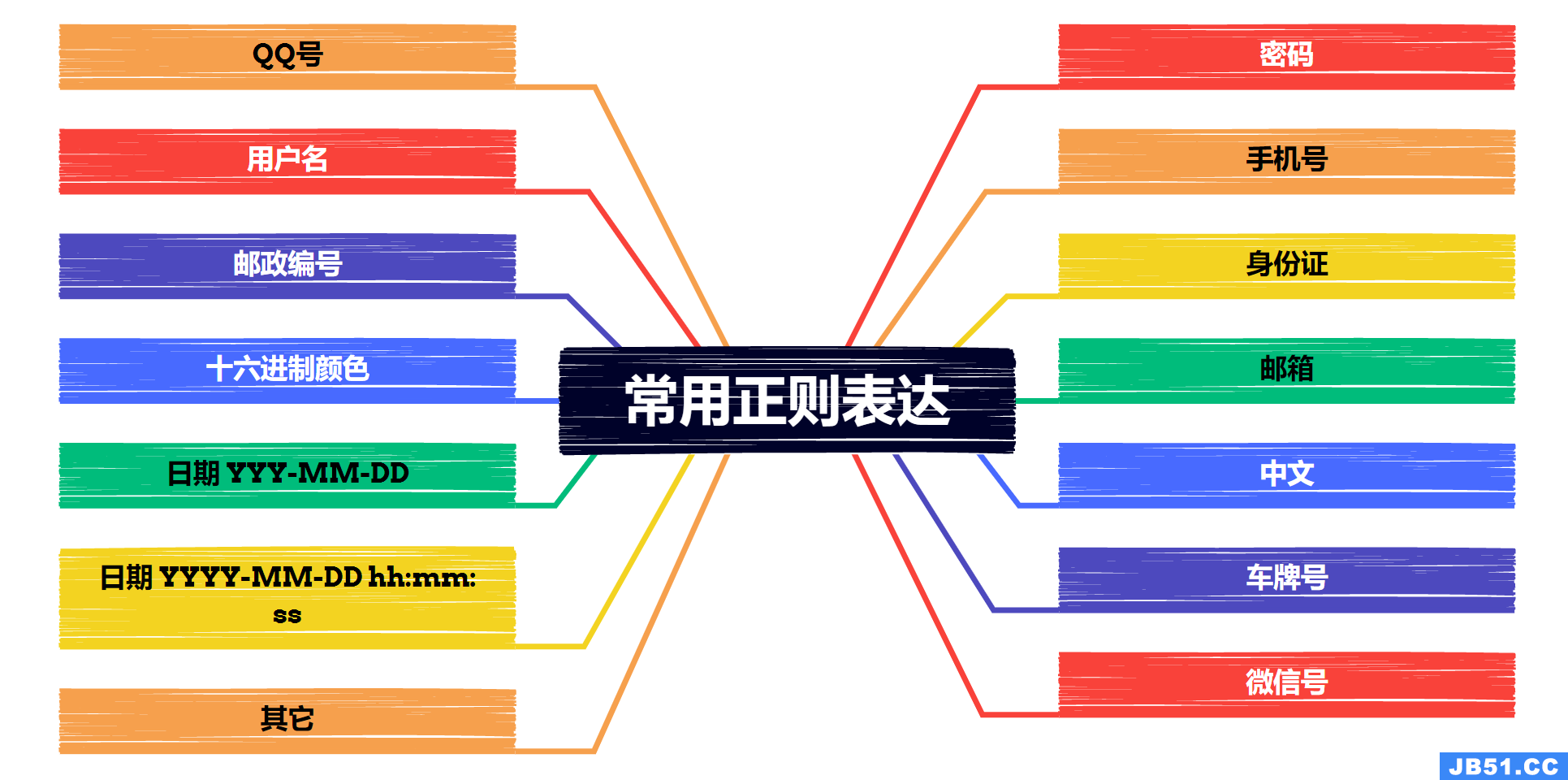

4- Conclusions

| model | train accuracy | test accuracy |
|---|---|---|
| 3-layer NN without regularization | 95% | 91.5% |
| 3-layer NN with L2-regularization | 94% | 93% |
| 3-layer NN with dropout | 93% | 95% |
