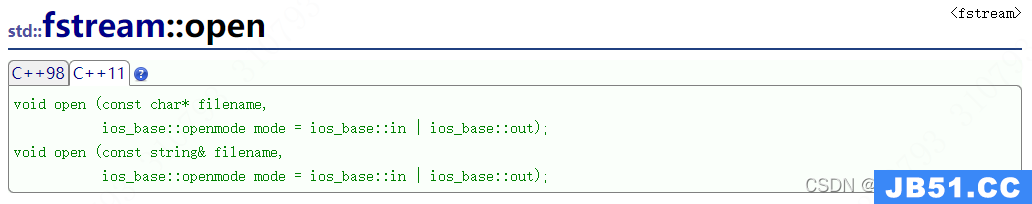

```swift
func performRectangleDetection(image: CIImage) -> CIImage? {
    var resultImage: CIImage?
    let detector: CIDetector = CIDetector(ofType: CIDetectorTypeRectangle, context: nil, options: [CIDetectorAccuracy: CIDetectorAccuracyHigh])

    // Get the detections
    let features = detector.featuresInImage(image)
    for feature in features as! [CIRectangleFeature] {
        resultImage = self.drawHighlightOverlayForPoints(image, topLeft: feature.topLeft, topRight: feature.topRight, bottomLeft: feature.bottomLeft, bottomRight: feature.bottomRight)
    }
    return resultImage
}

func drawHighlightOverlayForPoints(image: CIImage, topLeft: CGPoint, topRight: CGPoint, bottomLeft: CGPoint, bottomRight: CGPoint) -> CIImage {
    // Semi-transparent orange fill, cropped to the input image's extent
    var overlay = CIImage(color: CIColor(red: 1.0, green: 0.55, blue: 0.0, alpha: 0.45))
    overlay = overlay.imageByCroppingToRect(image.extent)
    // Warp the overlay onto the detected quadrilateral (still in Core Image coordinates)
    overlay = overlay.imageByApplyingFilter("CIPerspectiveTransformWithExtent", withInputParameters: [
        "inputExtent": CIVector(CGRect: image.extent),
        "inputTopLeft": CIVector(CGPoint: topLeft),
        "inputTopRight": CIVector(CGPoint: topRight),
        "inputBottomLeft": CIVector(CGPoint: bottomLeft),
        "inputBottomRight": CIVector(CGPoint: bottomRight)
    ])
    return overlay.imageByCompositingOverImage(image)
}
```

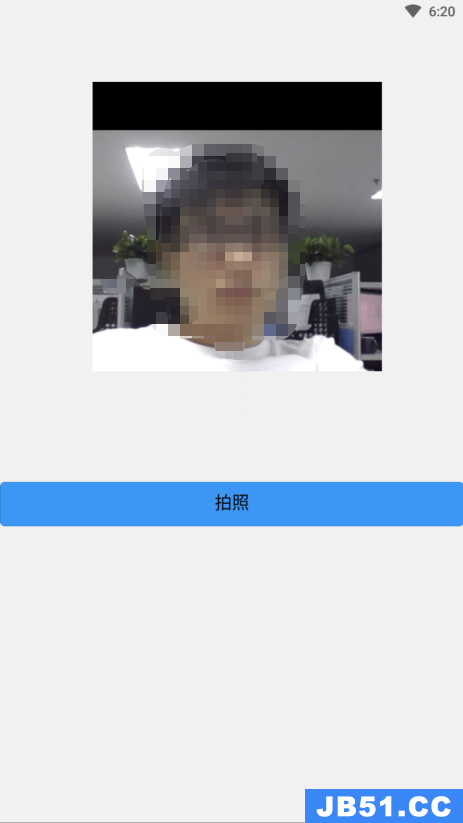

Calling performRectangleDetection displays the detected rectangle through the CIImage.

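It looks like the picture above. I need to display the same red rectangle using a UIBezierPath set to stroke, so that the user can adjust the detection in case it isn't 100% accurate. I have tried to draw a path, but without success. Here is how I draw the path. I use a custom class called Rect to hold the 4 points. Here is the detection: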

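```swift
// Called on a UIImage (`self` is the UIImage), e.g. from an extension
func detectRect() -> Rect {
    var rect: Rect?
    let detector: CIDetector = CIDetector(ofType: CIDetectorTypeRectangle, context: nil, options: [CIDetectorAccuracy: CIDetectorAccuracyHigh])

    // Get the detections (corner points are in Core Image coordinates, origin at the bottom-left)
    let features = detector.featuresInImage(CIImage(image: self)!)
    for feature in features as! [CIRectangleFeature] {
        rect = Rect(tL: feature.topLeft, tR: feature.topRight, bR: feature.bottomRight, bL: feature.bottomLeft)
    }
    // Traps at runtime if no rectangle was detected
    return rect!
}
```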
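The Rect class itself isn't shown in the question. For reference, here is a minimal hypothetical stand-in (a struct rather than a class) that matches the `Rect(tL:tR:bR:bL:)` initializer and the corner properties used above. The `mapped(_:)` helper and the `corners` property are our additions, not part of the original code: applying one conversion closure to all four corners avoids writing the same arithmetic four times.

```swift
import Foundation

/// Hypothetical stand-in for the question's Rect class (its source is not shown).
/// Holds the four corners reported by CIRectangleFeature.
struct Rect {
    var topLeft: CGPoint
    var topRight: CGPoint
    var bottomRight: CGPoint
    var bottomLeft: CGPoint

    init(tL: CGPoint, tR: CGPoint, bR: CGPoint, bL: CGPoint) {
        topLeft = tL
        topRight = tR
        bottomRight = bR
        bottomLeft = bL
    }

    /// Returns a new Rect with the same conversion applied to every corner.
    func mapped(_ transform: (CGPoint) -> CGPoint) -> Rect {
        Rect(tL: transform(topLeft), tR: transform(topRight),
             bR: transform(bottomRight), bL: transform(bottomLeft))
    }

    /// Corners in the order the question passes them to drawPath.
    var corners: [CGPoint] { [topLeft, topRight, bottomRight, bottomLeft] }
}
```

For example, `r.mapped { CGPoint(x: $0.x * 2, y: $0.y * 2) }` doubles every corner in one call.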

Next, I have to scale the coordinates. Here is the function inside the Rect class:

```swift
func scaleRect(image: UIImage, imageView: UIImageView) -> Rect {
    let scaleX = imageView.bounds.width / image.size.width

    var tlx = topLeft.x * scaleX
    var tly = topLeft.y * scaleX
    tlx += (imageView.bounds.width - image.size.width * scaleX) / 2.0
    tly += (imageView.bounds.height - image.size.height * scaleX) / 2.0
    let tl = CGPointMake(tlx, tly)

    var trx = topRight.x * scaleX
    var trY = topRight.y * scaleX
    trx += (imageView.bounds.width - image.size.width * scaleX) / 2.0
    trY += (imageView.bounds.height - image.size.height * scaleX) / 2.0
    let tr = CGPointMake(trx, trY)

    var brx = bottomRight.x * scaleX
    var bry = bottomRight.y * scaleX
    brx += (imageView.bounds.width - image.size.width * scaleX) / 2.0
    bry += (imageView.bounds.height - image.size.height * scaleX) / 2.0
    let br = CGPointMake(brx, bry)

    var blx = bottomLeft.x * scaleX
    var bly = bottomLeft.y * scaleX
    blx += (imageView.bounds.width - image.size.width * scaleX) / 2.0
    bly += (imageView.bounds.height - image.size.height * scaleX) / 2.0
    let bl = CGPointMake(blx, bly)

    let rect = Rect(tL: tl, tR: tr, bR: br, bL: bl)
    return rect
}
```
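Comparing scaleRect with what Core Image reports suggests two gaps: the y axis is never flipped (Core Image puts the origin at the bottom-left, UIKit at the top-left), and the scale always uses the width ratio, which equals the aspect-fit scale only when width is the limiting dimension. A minimal sketch of the full mapping follows. It assumes the image view uses `.scaleAspectFit`, that `imageSize` is the CIImage's extent size (pixels), and that the CIImage has the same orientation as the displayed image; the function name `convertFromCoreImage` is hypothetical.

```swift
import Foundation

/// Hypothetical helper: maps a point from Core Image coordinates (origin at the
/// bottom-left, in image pixels) into a view that shows the image with
/// aspect-fit scaling (UIKit coordinates, origin at the top-left).
/// Assumes the CIImage has the same orientation as the displayed image.
func convertFromCoreImage(_ point: CGPoint, imageSize: CGSize, viewSize: CGSize) -> CGPoint {
    // Aspect fit uses one uniform scale: the smaller of the two ratios.
    let scale = min(viewSize.width / imageSize.width,
                    viewSize.height / imageSize.height)
    // Offsets that center the scaled image (letterboxing or pillarboxing).
    let offsetX = (viewSize.width - imageSize.width * scale) / 2
    let offsetY = (viewSize.height - imageSize.height * scale) / 2
    // Flip y against the image height, then scale and shift into the view.
    return CGPoint(x: point.x * scale + offsetX,
                   y: (imageSize.height - point.y) * scale + offsetY)
}
```

Each of the four corners would go through this before building the UIBezierPath, passing the CIImage's `extent.size` and `imageView.bounds.size`. AVFoundation's `AVMakeRect(aspectRatio:insideRect:)` computes the same aspect-fit rectangle if you prefer not to derive the offsets by hand. If the photo's `imageOrientation` is not `.up`, a CIImage created with `CIImage(image:)` may not be rotated to match, and an extra rotation step is still needed.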

Finally, I draw the path:

```swift
var tet = image.detectRect()
tet = tet.scaleRect(image, imageView: imageView)

let shapeLayer = CAShapeLayer()
let path = ViewController.drawPath(tet.topLeft, p2: tet.topRight, p3: tet.bottomRight, p4: tet.bottomLeft)
shapeLayer.path = path.CGPath
shapeLayer.lineWidth = 5
shapeLayer.fillColor = nil
shapeLayer.strokeColor = UIColor.orangeColor().CGColor
imageView.layer.addSublayer(shapeLayer)
```

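The path ends up off screen and is inaccurate. I know I have to convert the coordinates from Core Image coordinates to UIKit coordinates and then scale them to the UIImageView. Unfortunately, I don't know how to do that. I know I could probably reuse some of my detection code for this, but I don't know the right steps. Any help would be greatly appreciated! Thanks. Here is an example of what is happening: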

Update

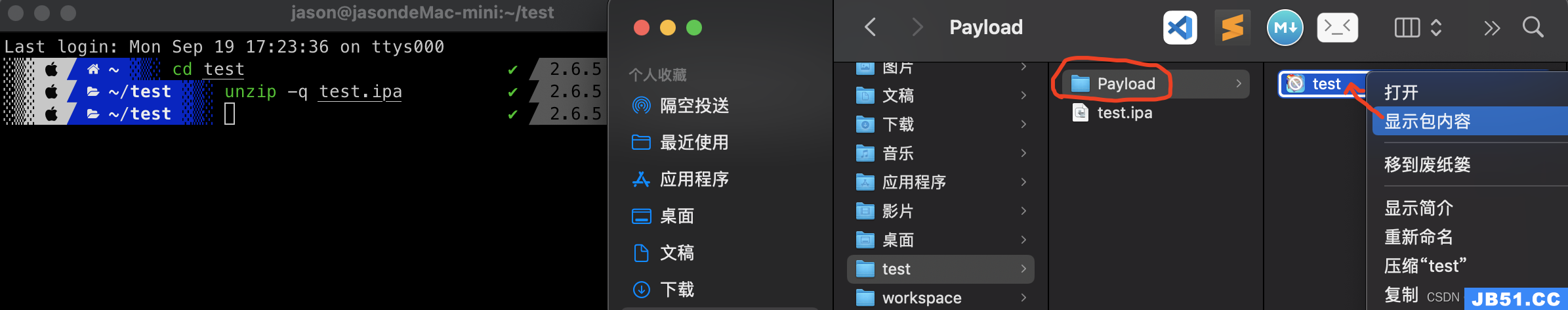

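To test the scaling I do in scaleRect(), I made my ImageView the same size as my image and printed the coordinates before and after scaling. Since they come out the same, I assumed my scaling is correct. Here is the code: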

```swift
var tet = image.detectRect()

// Before scaling
print(tet.topLeft)
print(tet.topRight)
print(tet.bottomRight)
print(tet.bottomLeft)

print("**************************************************")

// After scaling
tet = tet.scaleRect(image, imageView: imageView)
print(tet.topLeft)
print(tet.topRight)
print(tet.bottomRight)
print(tet.bottomLeft)
```

Here is the output:

```
(742.386596679688,927.240844726562)
(1514.93835449219,994.811096191406)
(1514.29675292969,155.2802734375)
(741.837524414062,208.55403137207)
(742.386596679688,208.55403137207)
```
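Identical before-and-after values are expected in this test, but they only confirm the scale and centering offsets, not the axis flip. Writing the image size as $W \times H$ and the view size as $W_v \times H_v$, making the view the same size as the image ($W_v = W$, $H_v = H$) collapses the scale and offsets to the identity, while a correct Core Image to UIKit mapping still flips $y$:

$$
s = \frac{W_v}{W} = 1, \qquad o_x = \frac{W_v - sW}{2} = 0, \qquad o_y = \frac{H_v - sH}{2} = 0
$$

$$
\texttt{scaleRect}:\ (x, y) \mapsto (sx + o_x,\ sy + o_y) = (x,\ y), \qquad
\text{expected}:\ (x, y) \mapsto (sx + o_x,\ s(H - y) + o_y) = (x,\ H - y)
$$

The two agree only for points on the horizontal midline $y = H/2$, so this test cannot reveal a missing flip.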

Update

To try to scale my coordinates, I tried two more things.

Attempt 1:

I tried using the UIView convertPoint function to convert the points from the image to the UIImageView. I replaced the body of scaleRect() with:

```swift
let view_image = UIView(frame: CGRectMake(0, 0, image.size.width, image.size.height))
let tL = view_image.convertPoint(self.topLeft, toView: imageView)
let tR = view_image.convertPoint(self.topRight, toView: imageView)
let bR = view_image.convertPoint(self.bottomRight, toView: imageView)
let bL = view_image.convertPoint(self.bottomLeft, toView: imageView)
```

Then I returned a Rect built from these four points.

Attempt 2:

I tried a simple coordinate conversion based on the differences between the widths and heights of the image and the imageView. Here is the code:

```swift
let widthDiff = (image.size.width - imageView.frame.size.width)
let highDiff = (image.size.height - imageView.frame.size.height)
let tL = CGPointMake(self.topLeft.x - widthDiff, self.topLeft.y - highDiff)
let tR = CGPointMake(self.topRight.x - widthDiff, self.topRight.y - highDiff)
let bR = CGPointMake(self.bottomRight.x - widthDiff, self.bottomRight.y - highDiff)
let bL = CGPointMake(self.bottomLeft.x - widthDiff, self.bottomLeft.y - highDiff)
```
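Subtracting the size differences is a pure translation. With the image size written as $W \times H$ and the view size as $W_v \times H_v$:

$$
p' = p - \begin{pmatrix} W - W_v \\ H - H_v \end{pmatrix}, \qquad \lVert p_1' - p_2' \rVert = \lVert p_1 - p_2 \rVert
$$

Distances between corners stay in full-resolution image pixels instead of shrinking to fit the view, and nothing flips the $y$ axis. For the Core Image origin $p = (0, 0)$ the result is $(W_v - W,\ H_v - H)$, which has negative coordinates, and so lies off screen, whenever the image is larger than the view.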

Update

I also tried using CGAffineTransform. Code:

```swift
var transform = CGAffineTransformMakeScale(1, -1)
transform = CGAffineTransformTranslate(transform, 0, -imageView.bounds.size.height)
let tL = CGPointApplyAffineTransform(self.topLeft, transform)
let tR = CGPointApplyAffineTransform(self.topRight, transform)
let bR = CGPointApplyAffineTransform(self.bottomRight, transform)
let bL = CGPointApplyAffineTransform(self.bottomLeft, transform)
```
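Assuming the translation is meant as $(0, -H_v)$, this attempt maps $(x, y) \mapsto (x,\ H_v - y)$: it flips against the image view's height rather than the image's, and applies no scale or centering offset. Under the assumptions of `.scaleAspectFit` and a CIImage whose orientation matches the displayed image (image $W \times H$ in Core Image pixels, view $W_v \times H_v$), the full mapping is a single affine transform:

$$
\begin{pmatrix} x' \\ y' \end{pmatrix} =
\begin{pmatrix} s & 0 \\ 0 & -s \end{pmatrix}
\begin{pmatrix} x \\ y \end{pmatrix} +
\begin{pmatrix} o_x \\ sH + o_y \end{pmatrix},
\qquad s = \min\!\left(\frac{W_v}{W}, \frac{H_v}{H}\right), \quad
o_x = \frac{W_v - sW}{2}, \quad o_y = \frac{H_v - sH}{2}
$$

In the Swift 2 API used here, that corresponds to `CGAffineTransformMake(s, 0, 0, -s, o_x, s * H + o_y)`, which can be passed to `CGPointApplyAffineTransform` for each corner.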

None of these worked, and I don't know what else to try. Please help; it would be greatly appreciated. Thanks!

Solution

I made a custom class to store the points and added some helper functions:

```swift
//
//  ObyRectangleFeature.swift
//
//  Created by 4oby on 5/20/16.
//  Copyright © 2016 cvv. All rights reserved.
//

import Foundation
import UIKit

extension CGPoint {
    func scalePointByCeficient(ƒ_x: CGFloat, ƒ_y: CGFloat) -> CGPoint {
        return CGPoint(x: self.x / ƒ_x, y: self.y / ƒ_y) // original image
    }

    func reversePointCoordinates() -> CGPoint {
        return CGPoint(x: self.y, y: self.x)
    }

    func sumPointCoordinates(add: CGPoint) -> CGPoint {
        return CGPoint(x: self.x + add.x, y: self.y + add.y)
    }

    func substractPointCoordinates(sub: CGPoint) -> CGPoint {
        return CGPoint(x: self.x - sub.x, y: self.y - sub.y)
    }
}

class ObyRectangleFeature: NSObject {
    var topLeft: CGPoint!
    var topRight: CGPoint!
    var bottomLeft: CGPoint!
    var bottomRight: CGPoint!

    var centerPoint: CGPoint {
        get {
            let centerX = ((topLeft.x + bottomLeft.x) / 2 + (topRight.x + bottomRight.x) / 2) / 2
            let centerY = ((topRight.y + topLeft.y) / 2 + (bottomRight.y + bottomLeft.y) / 2) / 2
            return CGPoint(x: centerX, y: centerY)
        }
    }

    convenience init(_ rectangleFeature: CIRectangleFeature) {
        self.init()
        topLeft = rectangleFeature.topLeft
        topRight = rectangleFeature.topRight
        bottomLeft = rectangleFeature.bottomLeft
        bottomRight = rectangleFeature.bottomRight
    }

    override init() {
        super.init()
    }

    func rotate90Degree() -> Void {
        let centerPoint = self.centerPoint
        // rotate: cos(90) = 0, sin(90) = 1
        topLeft = CGPoint(x: centerPoint.x + (topLeft.y - centerPoint.y), y: centerPoint.y + (topLeft.x - centerPoint.x))
        topRight = CGPoint(x: centerPoint.x + (topRight.y - centerPoint.y), y: centerPoint.y + (topRight.x - centerPoint.x))
        bottomLeft = CGPoint(x: centerPoint.x + (bottomLeft.y - centerPoint.y), y: centerPoint.y + (bottomLeft.x - centerPoint.x))
        bottomRight = CGPoint(x: centerPoint.x + (bottomRight.y - centerPoint.y), y: centerPoint.y + (bottomRight.x - centerPoint.x))
    }

    func scaleRectWithCoeficient(ƒ_x: CGFloat, ƒ_y: CGFloat) -> Void {
        topLeft = topLeft.scalePointByCeficient(ƒ_x, ƒ_y: ƒ_y)
        topRight = topRight.scalePointByCeficient(ƒ_x, ƒ_y: ƒ_y)
        bottomLeft = bottomLeft.scalePointByCeficient(ƒ_x, ƒ_y: ƒ_y)
        bottomRight = bottomRight.scalePointByCeficient(ƒ_x, ƒ_y: ƒ_y)
    }

    func correctOriginPoints() -> Void {
        let deltaCenter = self.centerPoint.reversePointCoordinates().substractPointCoordinates(self.centerPoint)

        let TL = topLeft
        let TR = topRight
        let BL = bottomLeft
        let BR = bottomRight

        topLeft = BL.sumPointCoordinates(deltaCenter)
        topRight = TL.sumPointCoordinates(deltaCenter)
        bottomLeft = BR.sumPointCoordinates(deltaCenter)
        bottomRight = TR.sumPointCoordinates(deltaCenter)
    }
}
```

Here is the initialization code:

```swift
let scalatedRect: ObyRectangleFeature = ObyRectangleFeature(rectangleFeature)

// fromSize -> initial size of the CIImage
// toSize   -> size of the scaled image
let ƒ_x = (fromSize.width / toSize.width)
let ƒ_y = (fromSize.height / toSize.height)

/* The coefficients are swapped intentionally because CIImage and UIImage
   use different coordinate systems. You could rotate before scaling to
   preserve the order, but if you do, the result will be off-center. */
scalatedRect.scaleRectWithCoeficient(ƒ_y, ƒ_y: ƒ_x)
scalatedRect.rotate90Degree()
scalatedRect.correctOriginPoints()
```

At this point, `scalatedRect` is ready to be drawn any way you like.
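Working through the arithmetic (our own derivation, not something the answer states): `rotate90Degree()` maps each corner `p` to `c + (p.y - c.y, p.x - c.x)`, which is a reflection across the diagonal through the center `c` rather than a true rotation, and it leaves the center unchanged. `correctOriginPoints()` then adds `(c.y - c.x, c.x - c.y)`, so the center cancels and the two steps together simply swap each corner's x and y and relabel the corners. That swap is what the Core Image to UIKit conversion reduces to for a photo whose `imageOrientation` is `.right` (common for portrait camera shots) when the CIImage holds the unrotated pixels, which is presumably why the answer works in that case. The sketch below checks the equivalence using a hypothetical `Quad` value type that re-implements the answer's steps rather than calling its class.

```swift
import Foundation

// Hypothetical value type standing in for ObyRectangleFeature.
struct Quad {
    var topLeft, topRight, bottomLeft, bottomRight: CGPoint

    // Same formula as ObyRectangleFeature.centerPoint (the mean of the four corners).
    var centerPoint: CGPoint {
        CGPoint(x: ((topLeft.x + bottomLeft.x) / 2 + (topRight.x + bottomRight.x) / 2) / 2,
                y: ((topRight.y + topLeft.y) / 2 + (bottomRight.y + bottomLeft.y) / 2) / 2)
    }

    // Re-implementation of the answer's rotate90Degree() followed by correctOriginPoints().
    func answerSteps() -> Quad {
        let c = centerPoint
        // rotate90Degree(): p -> c + (p.y - c.y, p.x - c.x)
        func r(_ p: CGPoint) -> CGPoint {
            CGPoint(x: c.x + (p.y - c.y), y: c.y + (p.x - c.x))
        }
        let rot = Quad(topLeft: r(topLeft), topRight: r(topRight),
                       bottomLeft: r(bottomLeft), bottomRight: r(bottomRight))
        // correctOriginPoints(): shift by (reversed center - center), then relabel corners
        let c2 = rot.centerPoint
        let d = CGPoint(x: c2.y - c2.x, y: c2.x - c2.y)
        func s(_ p: CGPoint) -> CGPoint { CGPoint(x: p.x + d.x, y: p.y + d.y) }
        return Quad(topLeft: s(rot.bottomLeft), topRight: s(rot.topLeft),
                    bottomLeft: s(rot.bottomRight), bottomRight: s(rot.topRight))
    }

    // Equivalent shortcut: swap x and y of every corner, then relabel the corners.
    func swappedAndRelabeled() -> Quad {
        func sw(_ p: CGPoint) -> CGPoint { CGPoint(x: p.y, y: p.x) }
        return Quad(topLeft: sw(bottomLeft), topRight: sw(topLeft),
                    bottomLeft: sw(bottomRight), bottomRight: sw(topRight))
    }
}
```

The scaling with swapped coefficients still happens before these two steps; the shortcut only replaces `rotate90Degree()` plus `correctOriginPoints()`.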
